- Research

- Open access


Interventions, methods and outcome measures used in teaching evidence-based practice to healthcare students: an overview of systematic reviews

BMC Medical Education volume 24, Article number: 306 (2024)

Abstract

Background

To fully implement the internationally acknowledged requirements for teaching evidence-based practice, and to support students’ development of core competencies in evidence-based practice, educators at professional bachelor-degree programs in healthcare need a systematic overview of evidence-based teaching and learning interventions. The purpose of this overview of systematic reviews was to summarize and synthesize the current evidence from systematic reviews on educational interventions used by educators to teach evidence-based practice to professional bachelor-degree healthcare students and to identify the evidence-based practice-related learning outcomes used.

Methods

An overview of systematic reviews. Four databases (PubMed/Medline, CINAHL, ERIC and the Cochrane library) were searched from May 2013 to January 25th, 2024. Additional sources were checked for unpublished or ongoing systematic reviews. Eligibility criteria included systematic reviews of studies among undergraduate nursing, physiotherapist, occupational therapist, midwife, nutrition and health, and biomedical laboratory science students, evaluating educational interventions aimed at teaching evidence-based practice in a classroom setting, a clinical practice setting, or a combination of the two. Two authors independently performed initial eligibility screening of titles/abstracts. Four authors independently performed full-text screening and assessed the quality of selected systematic reviews using standardized instruments. Data were extracted and synthesized using a narrative approach.

Results

A total of 524 references were retrieved, and 6 systematic reviews (with a total of 39 primary studies) were included. Overlap between the systematic reviews was minimal. All the systematic reviews were of low methodological quality. Synthesis and analysis revealed a variety of teaching modalities and approaches. The outcomes were to some extent assessed in accordance with the Sicily group’s categories “skills”, “attitude” and “knowledge”, whereas “behaviors”, “reaction to educational experience”, “self-efficacy” and “benefits for the patient” were rarely used.

Conclusions

Evidence-based practice is widely taught to undergraduate healthcare students, and a variety of interventions are used and recognized. Not all categories of outcomes suggested by the Sicily group are used to evaluate outcomes of evidence-based practice teaching. There is a need for studies measuring the effect on outcomes in all the Sicily group categories, to enhance sustainability and transition of evidence-based practice competencies to the context of healthcare practice.

Background

Evidence-based practice (EBP) enhances the quality of healthcare, reduces the cost, improves patient outcomes, empowers clinicians, and is recognized as a problem-solving approach [1] that integrates the best available evidence with clinical expertise and patient preferences and values [2]. A recent scoping review of EBP and patient outcomes indicates that EBPs improve patient outcomes and yield a positive return on investment for hospitals and healthcare systems. The top outcomes measured were length of stay, mortality, patient compliance/adherence, readmissions, pneumonia and other infections, falls, morbidity, patient satisfaction, patient anxiety/depression, patient complications and pain. The authors conclude that healthcare professionals have a professional and ethical responsibility to provide expert care, which requires an evidence-based approach. Furthermore, educators must become competent in EBP methodology [3].

According to the Sicily statement group, teaching and practicing EBP requires a 5-step approach: 1) pose an answerable clinical question (Ask), 2) search and retrieve relevant evidence (Search), 3) critically appraise the evidence for validity and clinical importance (Appraise), 4) apply the results in practice by integrating the evidence with clinical expertise, patient preferences and values to make a clinical decision (Integrate), and 5) evaluate the change or outcome (Evaluate/Assess) [4, 5]. Thus, according to the World Health Organization, educators, e.g., within undergraduate healthcare education, play a vital role by “integrating evidence-based teaching and learning processes, and helping learners interpret and apply evidence in their clinical learning experiences” [6].

A scoping review by Larsen et al. of 81 studies on interventions for teaching EBP within Professional bachelor-degree healthcare programs (PBHP) (in English: undergraduate/bachelor) shows that the majority of EBP teaching interventions include the first four steps, but the fifth step “evaluate/assess” is less often applied [5]. PBHP include bachelor-degree programs characterized by combined theoretical education and clinical training within nursing, physiotherapy, occupational therapy, radiography, and biomedical laboratory science. Furthermore, an overview of systematic reviews focusing on practicing healthcare professionals’ EBP competencies testifies that although graduates may have a moderate to high level of self-reported EBP knowledge, skills, attitudes, and beliefs, this does not translate into their subsequent EBP implementation [7]. Although this cannot be seen as direct evidence of inadequate EBP teaching during undergraduate education, it is irrefutable that insufficient EBP competencies among clinicians across healthcare disciplines impede their efforts to attain the highest care quality and improved patient outcomes in clinical practice after graduation.

Research shows that teaching about EBP includes different types of modalities. An overview of systematic reviews, published by Young et al. in 2014 [8] and updated by Bala et al. in 2021 [9], synthesizes the effects of EBP teaching interventions including under- and postgraduate healthcare professionals, the majority being medical students. They find that multifaceted interventions with a combination of lectures, computer lab sessions, small group discussion, journal clubs, use of current clinical issues, portfolios and assignments lead to improvement in students’ EBP knowledge, skills, attitudes, and behaviors compared to single interventions or no interventions [8, 9]. Larsen et al. find that within PBHP, collaboration with clinical practice is the second most frequently used intervention for teaching EBP and most often involves four or all five steps of the EBP teaching approach [5]. The use of clinically integrated teaching in EBP is only sparsely identified in the overviews by Young et al. and Bala et al. [8, 9]. Therefore, the evidence obtained within Bachelor of Medicine, which is a theoretical education [10], may not be directly transferable for use in PBHP, which combines theoretical and mandatory clinical education [11].

Since the overview by Young et al. [8], several reviews of interventions for teaching EBP used within PBHP have been published [5, 12, 13, 14].

We therefore wanted to explore the newest evidence for teaching EBP focusing on PBHP as these programs are characterized by a large proportion of clinical teaching. These healthcare professions are certified through a PBHP at a level corresponding to a University Bachelor Degree, but with a strong focus on professional practice by combining theoretical studies with mandatory clinical teaching. In Denmark, almost half of PBHP take place in clinical practice. These applied science programs qualify “the students to independently analyze, evaluate and reflect on problems in order to carry out practice-based, complex, and development-oriented job functions” [11]. Thus, both the purpose of these PBHP and the amount of clinical practice included in the programs contrast with, for example, medicine.

Thus, this overview identifies the newest evidence for teaching EBP specifically within PBHP and includes reviews using quantitative and/or qualitative methods.

We believe that such an overview is important knowledge for educators to be able to take the EBP teaching for healthcare professions to a higher level. Reviewing and describing EBP-related learning outcomes, and categorizing them according to the seven assessment categories developed by the Sicily group [2], will also be useful knowledge to educators in healthcare professions. These seven assessment categories for EBP learning (reaction to the educational experience, attitudes, self-efficacy, knowledge, skills, behaviors and benefits to patients) can be linked to the five-step EBP approach. For example, reactions to the educational experience: did the educator’s teaching style enhance learners’ enthusiasm for asking questions? (Ask); self-efficacy: how well do learners think they critically appraise evidence? (Appraise); skills: can learners come to a reasonable interpretation of how to apply the evidence? (Integrate) [2]. Thus, this set of categories can be seen as a basic set of EBP-related learning outcomes to classify the impact from EBP educational interventions.

Purpose and review questions

A systematic overview of which evidence-based teaching interventions and which EBP-related learning outcomes are used will give teachers access to important knowledge on what to implement and how to evaluate EBP teaching.

Thus, the purpose of this overview is to synthesize the latest evidence from systematic reviews about EBP teaching interventions in PBHP. This overview adds to the existing evidence by focusing on systematic reviews that a) include qualitative and/or quantitative studies regardless of design, b) are conducted among PBHP within nursing, physiotherapy, occupational therapy, midwifery, nutrition and health and biomedical laboratory science, and c) incorporate the Sicily group's 5-step approach and seven assessment categories when analyzing the EBP teaching interventions and EBP-related learning outcomes.

The questions of this overview of systematic reviews are:

1) Which educational interventions are described and used by educators to teach EBP to Professional Bachelor-degree healthcare students?

2) What EBP-related learning outcomes have been used to evaluate teaching interventions?

Methods

The study protocol was guided by the Cochrane Handbook on Overviews of Reviews [15] and the review process was reported in accordance with The Preferred Reporting Items for Systematic Reviews and Meta-analyses (PRISMA) statement [16] when this was consistent with the Cochrane Handbook.

Inclusion criteria

Eligible reviews fulfilled the inclusion criteria for publication type, population, intervention, and context (see Table 1). Failing a single inclusion criterion implied exclusion.

Search strategy

On January 25th, 2024, a systematic search was conducted in PubMed/Medline, CINAHL (EBSCOhost), ERIC (EBSCOhost) and the Cochrane library, covering May 2013 to January 25th, 2024, to identify systematic reviews published after the overview by Young et al. [8]. In collaboration with a research librarian, a search strategy of controlled vocabulary and free text terms related to systematic reviews, the student population, teaching interventions, teaching context, and evidence-based practice was developed (see Additional file 1). For each database, the search strategy was peer reviewed, revised, modified and subsequently pilot tested. No language restrictions were imposed.

To identify further eligible reviews, the following methods were used: setting email alerts from the databases to provide weekly updates on new publications; backward and forward citation searching based on the included reviews by screening reference lists and using the “cited by” and “similar results” functions in PubMed and CINAHL; broad searching in Google Scholar (Advanced search), Prospero, JBI Evidence Synthesis and the OpenGrey database; contacting experts in the field via email to first authors of included reviews; and making queries via Twitter and ResearchGate for any information on unpublished or ongoing reviews of relevance.

Selection and quality appraisal process

Database search results were merged, duplicate records were removed, and titles/abstracts were initially screened via Covidence [17]. The assessment process was pilot tested by four authors independently assessing eligibility and methodological quality of one potential review, followed by joint discussion to reach a common understanding of the criteria used. Two authors independently screened each title/abstract for compliance with the predefined eligibility criteria. Disagreements were resolved by a third author. Four authors were paired for full-text screening, and each pair independently assessed 50% of the potentially relevant reviews for eligibility and methodological quality.

For quality appraisal, two independent authors used the AMSTAR-2 (A MeaSurement Tool to Assess systematic Reviews) for reviews including intervention studies [18] and the Joanna Briggs Institute Checklist for Systematic Reviews and Research Syntheses (JBI checklist) [19] for reviews including both quantitative and qualitative or only qualitative studies. Uncertainties in assessments were resolved by requesting clarifying information from first authors of reviews and/or discussion with co-authors of the present overview.

Overall methodological quality for included reviews was assessed using the overall confidence criteria of AMSTAR 2 based on scorings in seven critical domains [18] appraised as high (none or one non-critical flaw), moderate (more than one non-critical flaw), low (one critical weakness) or critically low (more than one critical weakness) [18]. For systematic reviews of qualitative studies [13, 20, 21] the critical domains of the AMSTAR 2, not specified in the JBI checklist, were added.
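The rating rule described above can be sketched as a small function. This is a minimal illustration only, assuming that the number of critical and non-critical weaknesses has already been counted for each review; the function name and its inputs are hypothetical and are not part of the AMSTAR 2 instrument itself.

```python
def amstar2_overall_confidence(critical_flaws: int, noncritical_flaws: int) -> str:
    """Map weakness counts to the overall rating as described in the text.

    Hypothetical helper: the counts are assumed to come from a completed
    AMSTAR 2 appraisal of a single systematic review.
    """
    if critical_flaws < 0 or noncritical_flaws < 0:
        raise ValueError("weakness counts must be non-negative")
    if critical_flaws > 1:
        return "critically low"  # more than one critical weakness
    if critical_flaws == 1:
        return "low"             # exactly one critical weakness
    if noncritical_flaws > 1:
        return "moderate"        # more than one non-critical flaw
    return "high"                # none or one non-critical flaw


# Example: a review without a registered protocol and without a list of
# excluded studies has two critical weaknesses.
rating = amstar2_overall_confidence(critical_flaws=2, noncritical_flaws=1)
```

Because critical weaknesses are checked first, any number of non-critical flaws cannot raise a review above "low" once a critical weakness is present.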

Data extraction and synthesis process

Data were initially extracted by the first author, confirmed or rejected by the last author and finally discussed with the whole author group until consensus was reached.

Data extraction included 1) Information about the search and selection process according to the PRISMA statement [16, 22], 2) Characteristics of the systematic reviews inspired by a standard in the Cochrane Handbook [15], 3) A citation index inspired by Young et al. [8] used to illustrate overlap of primary studies in the included systematic reviews, and to ensure that data from each primary study were extracted only once [15], 4) Data on EBP teaching interventions and EBP-related outcomes. These data were extracted, reformatted (categorized inductively into two categories: “Collaboration interventions” and “Educational interventions”) and presented as narrative summaries [15]. Data on outcomes were categorized according to the seven assessment categories defined by the Sicily group to classify the impact from EBP educational interventions: reaction to the educational experience, attitudes, self-efficacy, knowledge, skills, behaviors and benefits to patients [2]. When information under points 3 and 4 was missing, the abstracts of the primary study articles were reviewed.
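A citation index of the kind mentioned in point 3 can be thought of as a mapping from each primary study to the reviews that include it. The sketch below is illustrative only: the review labels and study identifiers are invented placeholders, not data from this overview, and the helper names are hypothetical.

```python
from collections import defaultdict


def build_citation_index(review_to_studies):
    """Invert a review -> studies mapping into study -> set of reviews."""
    index = defaultdict(set)
    for review, studies in review_to_studies.items():
        for study in studies:
            index[study].add(review)
    return dict(index)


def overlapping_studies(index):
    """Return the studies that appear in more than one review."""
    return {study: sorted(reviews)
            for study, reviews in index.items() if len(reviews) > 1}


# Placeholder data (hypothetical identifiers).
example = {
    "Review A": {"study-1", "study-2"},
    "Review B": {"study-2", "study-3"},
    "Review C": {"study-2", "study-4"},
}
index = build_citation_index(example)
unique_studies = sorted(index)          # each primary study listed once
overlap = overlapping_studies(index)    # studies to extract only once
```

Iterating over the keys of the index, rather than over each review's study list, is what ensures that every primary study is extracted a single time.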

Results

Results of the search

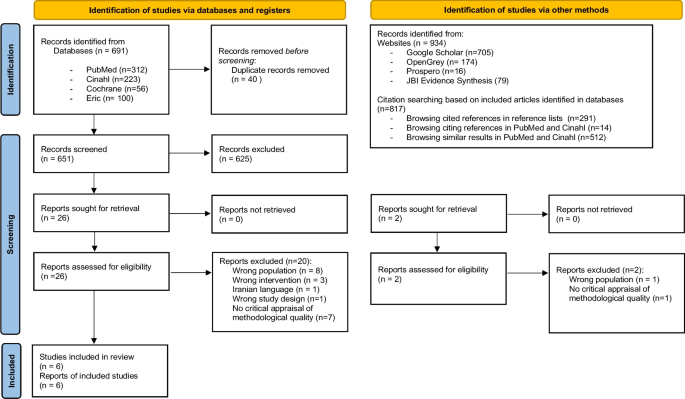

The database search yielded 691 references after duplicates were removed. Title and abstract screening deemed 525 references irrelevant. Searching via other methods yielded two additional references. Out of 28 study reports assessed for eligibility 22 were excluded, leaving a total of six systematic reviews. Screening resulted in 100% agreement among the authors. Figure 1 details the search and selection process. Reviews that might seem relevant but did not meet the eligibility criteria [15], are listed in Additional file 2. One protocol for a potentially relevant review was identified as ongoing [23].

Characteristics of included systematic reviews and overlap between them

The six systematic reviews originated from the Middle East, Asia, North America, Europe, Scandinavia, and Australia. Two out of six reviews did not identify themselves as systematic reviews but did fulfill this eligibility criterion [12, 20]. All six represented a total of 64 primary studies and a total population of 6649 students (see Table 2). However, five of the six systematic reviews contained a total of 17 primary studies not eligible for our overview focus (e.g., postgraduate students) (see Additional file 3). Results from these primary studies were not extracted. Of the remaining primary studies, six were included in two, and one was included in three systematic reviews. Data from these studies were extracted only once to avoid double-counting. Thus, the six systematic reviews represented a total of 39 primary studies and a total population of 3394 students. Nursing students represented 3280 of these. One sample of 58 nutrition and health students and one sample of 56 mixed nursing and midwife students were included, but none from physiotherapy, occupational therapy, or biomedical laboratory science. The majority (n = 28) of the 39 primary studies had a quantitative design, of which 18 were quasi-experimental (see Additional file 4).
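The counts in this paragraph can be checked with a few lines of arithmetic. The sketch below assumes that the figure of 64 counts every appearance of a primary study in a review (so a study included in two reviews is counted twice); that reading is an assumption, but it is the one consistent with the reported total of 39. The function and variable names are hypothetical.

```python
def unique_study_count(total_entries, ineligible, in_two_reviews, in_three_reviews):
    """Number of distinct eligible primary studies.

    A study included in two reviews contributes one surplus entry;
    a study included in three reviews contributes two surplus entries.
    """
    eligible_entries = total_entries - ineligible
    surplus_entries = in_two_reviews * 1 + in_three_reviews * 2
    return eligible_entries - surplus_entries


unique_studies = unique_study_count(
    total_entries=64, ineligible=17, in_two_reviews=6, in_three_reviews=1
)

# Student samples as reported in the text.
students = {
    "nursing": 3280,
    "nutrition and health": 58,
    "mixed nursing and midwifery": 56,
}
total_students = sum(students.values())
```

Under these assumptions, 64 − 17 = 47 eligible entries, minus 8 surplus entries, gives 39 distinct studies, and the three student samples sum to 3394.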

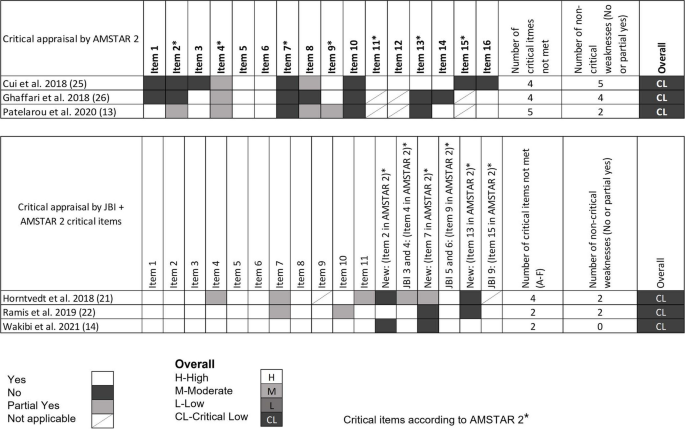

Quality of systematic review

All the included systematic reviews were assessed as having critically low quality, with 100% concordance between the two designated authors (see Fig. 2) [18]. The main reasons for the low quality of the reviews were a) not demonstrating a registered protocol prior to the review [13, 20, 24, 25], b) not providing a list of excluded studies with justification for exclusion [12, 13, 21, 24, 25] and c) not accounting for the quality of the individual studies when interpreting the result of the review [12, 20, 21, 25].

Overall methodological quality assessment for systematic reviews. Quantitative studies [12, 24, 25] were assessed following the AMSTAR 2 critical domain guidelines. Qualitative studies [13, 20, 21] were assessed following the JBI checklist. For overall classification, qualitative studies were also assessed with the following critical AMSTAR 2 domains not specified in the JBI checklist: item 2 (is the protocol registered before commencement of the review), item 7 (justification for excluding individual studies) and item 13 (consideration of risk of bias when interpreting the results of the review).

The two non-critical items of the AMSTAR 2 and the JBI checklist that were most often not met were reporting the sources of funding for primary studies and describing the included studies in adequate detail.

Most of the included reviews did report research questions including components of PICO, performed study selection and data extraction in duplicate, used appropriate methods for combining studies and used satisfactory techniques for assessing risk of bias (see Fig. 2).

Main findings from the systematic reviews

As illustrated in Table 2, this overview synthesizes evidence on a variety of approaches to promote EBP teaching in both classroom and clinical settings. The systematic reviews describe various interventions used for teaching in EBP, which can be summarized into two themes: Collaboration Interventions and Educational Interventions.

Collaboration interventions to teach EBP

In general, the reviews point out that interdisciplinary collaboration among health professionals and/or others, e.g., librarians and information technology professionals, is relevant when planning and teaching EBP [13, 20].

Specifically, regarding literature search, Wakibi et al. found that collaboration between librarians, computer laboratory technicians and nurse educators enhanced students’ skills [13]. Also, in terms of creating transfer between EBP teaching and clinical practice, collaboration between faculty, library, clinical institutions, and teaching institutions was used [13, 20].

Regarding collaboration with clinical practice, Ghaffari et al. found that teaching EBP integrated in clinical education could promote students’ knowledge and skills [25]. Horntvedt et al. found that during a six-week course in clinical practice, students obtained better skills in reading research articles and orally presenting the findings to staff and fellow students [20]. Participation in clinical research projects combined with instructions in analyzing and discussing research findings also “led to a positive approach and EBP knowledge” [20]. Moreover, reading research articles during the clinical practice period enhanced the students’ critical thinking skills. Furthermore, Horntvedt et al. mention that students found it meaningful to conduct a “mini” research project in clinical settings, as the identified evidence became relevant [20].

Educational interventions

Educational interventions can be described as “Framing interventions”, understood as different ways to set up a framework for teaching EBP, and “Teaching methods”, understood as specific methods used when teaching EBP.

Various educational interventions were described in most reviews [12, 13, 20, 21]. According to Patelarou et al., no specific educational intervention, regardless of framing and methods, was favored to “increase knowledge, skills and competency as well as improve the beliefs, attitudes and behaviors of nursing students” [12].

Framing interventions

The approaches used to set up a framework for teaching EBP were labelled in different ways: programs, interactive teaching strategies, educational programs, courses etc. Approaches of various durations from hours to months were described as well as stepwise interventions [12, 13, 20, 21, 24, 25].

Some frameworks [13, 20, 21, 24] were based on the assessment categories described by the Sicily group [2], based on theory [21] or, as mentioned above, clinically integrated [20]. Wakibi et al. identified interventions used to foster a spirit of inquiry and an EBP culture reflecting the “5-step approach” of the Sicily group [4]: asking PICOT questions, searching for best evidence, critical appraisal, integrating evidence with clinical expertise and patient preferences to make clinical decisions, evaluating outcomes of EBP practice, and disseminating useful outcomes [13]. Ramis et al. found that teaching interventions based on theory, such as Bandura’s self-efficacy theory or Rogers’ theory of diffusion, led to positive effects on students’ EBP knowledge and attitudes [21].

Teaching methods

A variety of teaching methods were used, such as lectures [12, 13, 20], problem-based learning [12, 20, 25], group work, discussions [12, 13], and presentations [20] (see Table 2). According to the reviews, the most effective way to achieve the skills required to practice EBP as described in the “5-step approach” by the Sicily group appears to be a combination of different teaching methods such as lectures, assignments, discussions, group work, and exams/tests.

Four systematic reviews identified such combinations or multifaceted approaches [12, 13, 20, 21]. Patelarou et al. state that “EBP education approaches should be blended” [12]. Thus, combinations of video, voice-over, PowerPoint, problem-based learning, lectures, team-based learning, projects, and small groups were found in different studies. Such combinations had been shown “to be effective” [12]. Similarly, Horntvedt et al. found that nursing students reported that various teaching methods improved their EBP knowledge and skills [20].

According to Ghaffari et al., including problem-based learning in teaching plans “improved the clinical care and performance of the students”, while the problem-solving approach “promoted student knowledge” [25]. Other teaching methods identified, e.g., flipped classroom [20] and virtual simulation [12, 20], were also characterized as useful interactive teaching interventions. Furthermore, face-to-face approaches seem “more effective” than online teaching interventions to enhance students’ research and appraisal skills, and journal clubs enhance the students’ critical appraisal skills [12].

As the reviews included in this overview are primarily based on qualitative, mixed methods and quasi-experimental studies, and only to a minor extent on randomized controlled trials (see Table 2), it is not possible to draw conclusions about the most effective methods. However, a combination of methods and an innovative collaboration between librarians, information technology professionals and healthcare professionals seem to be the most effective approach to achieve the required EBP skills.

EBP-related outcomes

Most of the systematic reviews presented a wide array of outcome assessments applied in EBP research (see Table 3). Analyzing the outcomes according to the Sicily group’s assessment categories revealed that “knowledge” (used in 19 out of 39 primary studies), “skills” (used in 18 out of 39 primary studies) and “attitude” (used in 17 out of 39) were by far the most frequently used assessment categories, whereas outcomes within the categories of “behaviors” (used in eight studies), “reaction to educational experience” (in five studies), “self-efficacy” (in two studies), and “benefits for the patient” (in one study) were used to a far lesser extent. Additionally, the outcomes that we were not able to categorize within the seven assessment categories were “future use” and “Global EBP competence”.
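To make the relative use of the categories easier to compare, the reported counts can be expressed as shares of the 39 primary studies. The following is a minimal sketch using only the counts stated above; the variable and function names are hypothetical, and because a single study may assess several categories, the shares are not expected to sum to 100%.

```python
TOTAL_PRIMARY_STUDIES = 39

# Number of primary studies using each Sicily assessment category,
# as reported in the text.
category_counts = {
    "knowledge": 19,
    "skills": 18,
    "attitudes": 17,
    "behaviors": 8,
    "reaction to educational experience": 5,
    "self-efficacy": 2,
    "benefits to patients": 1,
}


def category_shares(counts, total):
    """Percentage of studies using each category, rounded to one decimal."""
    return {name: round(100 * n / total, 1) for name, n in counts.items()}


shares = category_shares(category_counts, TOTAL_PRIMARY_STUDIES)

# Print categories from most to least frequently used.
for name, pct in sorted(shares.items(), key=lambda item: item[1], reverse=True):
    print(f"{name}: {pct}%")
```

On this reading, each of the three most common categories was assessed in a little under half of the primary studies, while self-efficacy and benefits to patients were each assessed in roughly one study in twenty or fewer.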

Discussion

The purpose of this overview of systematic reviews was to collect and summarize evidence of the diversity of EBP teaching interventions and outcomes measured among professional bachelor-degree healthcare students.

Our results give an overview of “the state of the art” of using and measuring EBP in PBHP education. However, the quality of included systematic reviews was rated critically low. Thus, the result cannot support guidelines of best practice.

The analysis of the interventions and outcomes described in the 39 primary studies included in this overview reveals a wide variety of teaching methods and interventions being used and described in the scientific literature on EBP teaching of PBHP students. The results show some evidence of the five-step EBP approach in accordance with the inclusion criterion “interventions aimed at teaching one or more of the five EBP steps; Ask, Search, Appraise, Integrate, Assess/evaluate”. Most authors state that the students’ EBP skills, attitudes and knowledge improved by almost any of the described methods and interventions. However, descriptions of how the improvements were measured were less frequent.

We evaluated the described outcome measures and assessments according to the seven categories proposed by the Sicily group and found that most assessments were on “attitudes”, “skills” and “knowledge”, sometimes on “behaviors” and very seldom on “reaction to educational experience”, “self-efficacy” and “benefits to the patients”. To our knowledge, no systematic review or overview has made this evaluation of outcome categories before, but Bala et al. [9] also stated that knowledge, skills, and attitudes are the most commonly evaluated effects.

Comparing the outcomes measured between mainly medical [9] and nursing students, the most prevalent outcomes in both groups are knowledge, skills and attitudes around EBP. Measuring the students’ patient care or the impact of the EBP teaching on benefits for the patients is less prevalent. In contrast, Wu et al.’s systematic review shows that among clinical nurses, educational interventions supporting implementation of EBP projects can change patient outcomes positively. However, they also conclude that direct causal evidence of the educational interventions is difficult to measure because of the diversity of EBP projects implemented [26]. Regarding EBP behavior, the Sicily group recommends that this category be assessed by monitoring the frequency of the five-step EBP approach, e.g., whether learners ASK questions about patients, APPRAISE evidence related to patient care, and EVALUATE their EBP behavior and identify areas for improvement [2]. The results also showed evidence of student-clinician transition. “Future use” was identified in two systematic reviews [12, 13] and categorized as “others”. This outcome is not included in the seven Sicily categories. However, a systematic review of predictive modelling studies shows that future use, or the intention to use EBP after graduation, is influenced by the students’ EBP familiarity, EBP capability beliefs, EBP attitudes and academic and clinical support [27].

Teaching and evaluating EBP need to move beyond aiming at changes in knowledge, skills, and attitudes, and start focusing on changing and assessing behavior, self-efficacy and benefit to the patients. We recommend doing this using validated tools for the assessment of outcomes and in prospective studies with longer follow-up periods, preferably evaluating the adoption of EBP in clinical settings, bearing in mind that best teaching practice happens across sectors and settings, supported and supervised by multiple professions.

Based on a systematic review and international Delphi survey, a set of interprofessional EBP core competencies that details the competence content of each of the five steps has been published to inform curriculum development and benchmark EBP standards [28]. This consensus statement may be used by educators as a reference for both learning objectives and EBP content descriptions in future intervention research. The collaboration with clinical institutions and the integration of EBP teaching components such as EBP assignments or participation in clinical research projects are important results, specifically in the light of the dialectic between theoretical and clinical education as a core characteristic of professional bachelor-degree healthcare education.

Our study has some limitations that need consideration when interpreting the results. The EMBASE and Scopus databases were not included in the search strategy, although searching them might have identified additional sources. Most of the 22 excluded reviews included primary studies among other levels/healthcare groups of students or had not critically appraised their primary studies. This constitutes insufficient adherence to methodological guidelines for systematic reviews and limits the completeness of the reviews identified. Often, the result sections of the included reviews were poorly reported, which made it necessary to extract some, but not always sufficient, information from the primary study abstracts. As the present study is an overview and not a new systematic review, we did not extract information from the result sections of the primary studies. Thus, the comprehensiveness and applicability of the results of this overview are limited by the methodological limitations in the six included systematic reviews.

The existing evidence is based on different types of study designs. This heterogeneity is seen in all the included reviews. Thus, the present overview only conveys trends around the comparative effectiveness of the different ways to frame, or the methods used for, teaching EBP. This can be seen as a weakness for the clarity and applicability of the overview results. Also, our protocol is unpublished, which may weaken the transparency of the overview approach; however, our search strategies are available as additional material (see Additional file 1). In addition, the validity of data extraction can be discussed. Data were extracted consecutively by the first and last author and, if needed, consensus was reached by discussion with the entire research group. This method might have been strengthened by using two blinded reviewers to extract data and by presenting data with supporting kappa values.

The generalizability of the results of this overview is limited to undergraduate nursing students. However, we consider it a strength that the results represent a broad international perspective on framing EBP teaching, as well as teaching methods and outcomes used among educators in EBP. Primary studies exist among occupational therapy and physiotherapy students [5, 29] but have not been systematically synthesized. However, the evidence is almost non-existent among midwife, nutrition and health and biomedical laboratory science students. This has implications for further research efforts because evidence from within these student populations is paramount for future-proofing the quality assurance of clinical evidence-based healthcare practice.

Another implication is the need to compare how EBP teaching is framed, and which methods are used, both inter- and monoprofessionally among these professional bachelor-degree students. Lastly, we support the recommendations of Bala et al. to use validated tools to increase the focus on measuring behavior change in clinical practice and patient outcomes, and to report in accordance with the GREET guidelines for educational intervention studies [9].

Conclusion

This overview demonstrates a variety of approaches to promote EBP teaching among professional bachelor-degree healthcare students. Teaching EBP is based on collaboration with clinical practice and the use of different approaches to frame the teaching as well as different teaching methods. Furthermore, this overview has shown that interventions are often evaluated according to changes in the students’ skills, knowledge and attitudes towards EBP, but very rarely according to self-efficacy, behaviors, benefits to the patients or reaction to the educational experience, as suggested by the Sicily group. This might indicate that educators need to move on to measuring the effect of EBP teaching on outcomes comprising all categories, which are important for enhancing sustainable behavior and the transition of knowledge into the practice contexts where better healthcare education should have an impact. In our perspective, these gaps in EBP teaching are best met by focusing on more collaboration with clinical practice, which is the context where the final endpoint of teaching EBP should be anchored and evaluated.

Availability of data and materials

The datasets used and/or analyzed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- EBP: Evidence-Based Practice

- PBHP: Professional bachelor-degree healthcare programs

References

Mazurek Melnyk B, Fineout-Overholt E. Making the Case for Evidence-Based Practice and Cultivating a Spirit of Inquiry. In: Mazurek Melnyk B, Fineout-Overholt E, editors. Evidence-Based Practice in Nursing and Healthcare: A Guide to Best Practice. 4th ed. Wolters Kluwer; 2019. p. 7–32.

Tilson JK, Kaplan SL, Harris JL, Hutchinson A, Ilic D, Niederman R, et al. Sicily statement on classification and development of evidence-based practice learning assessment tools. BMC Med Educ. 2011;11(78):1–10.

Connor L, Dean J, McNett M, Tydings DM, Shrout A, Gorsuch PF, et al. Evidence-based practice improves patient outcomes and healthcare system return on investment: Findings from a scoping review. Worldviews Evid Based Nurs. 2023;20(1):6–15.

Dawes M, Summerskill W, Glasziou P, Cartabellotta N, Martin J, Hopayian K, et al. Sicily statement on evidence-based practice. BMC Med Educ. 2005;5(1):1–7.

Larsen CM, Terkelsen AS, Carlsen AF, Kristensen HK. Methods for teaching evidence-based practice: a scoping review. BMC Med Educ. 2019;19(1):1–33.

World Health Organization. Nurse educator core competencies. 2016 https://apps.who.int/iris/handle/10665/258713 Accessed 21 Mar 2023.

Saunders H, Gallagher-Ford L, Kvist T, Vehviläinen-Julkunen K. Practicing healthcare professionals’ evidence-based practice competencies: an overview of systematic reviews. Worldviews Evid Based Nurs. 2019;16(3):176–85.

Young T, Rohwer A, Volmink J, Clarke M. What Are the Effects of Teaching Evidence-Based Health Care (EBHC)? Overview of Systematic Reviews. PLoS ONE. 2014;9(1):1–13.

Bala MM, Poklepović Peričić T, Zajac J, Rohwer A, Klugarova J, Välimäki M, et al. What are the effects of teaching Evidence-Based Health Care (EBHC) at different levels of health professions education? An updated overview of systematic reviews. PLoS ONE. 2021;16(7):1–28.

Copenhagen University. Bachelor in medicine. 2024 https://studier.ku.dk/bachelor/medicin/undervisning-og-opbygning/ Accessed 31 Jan 2024.

Ministry of Higher Education and Science. Professional bachelor programmes. 2022 https://ufm.dk/en/education/higher-education/university-colleges/university-college-educations Accessed 31 Jan 2024.

Patelarou AE, Mechili EA, Ruzafa-Martinez M, Dolezel J, Gotlib J, Skela-Savič B, et al. Educational Interventions for Teaching Evidence-Based Practice to Undergraduate Nursing Students: A Scoping Review. Int J Env Res Public Health. 2020;17(17):1–24.

Wakibi S, Ferguson L, Berry L, Leidl D, Belton S. Teaching evidence-based nursing practice: a systematic review and convergent qualitative synthesis. J Prof Nurs. 2021;37(1):135–48.

Fiset VJ, Graham ID, Davies BL. Evidence-Based Practice in Clinical Nursing Education: A Scoping Review. J Nurs Educ. 2017;56(9):534–41.

Pollock M, Fernandes R, Becker L, Pieper D, Hartling L. Chapter V: Overviews of Reviews. In: Higgins J, Thomas J, Chandler J, Cumpston M, Li T, Page M, et al., editors. Cochrane Handbook for Systematic Reviews of Interventions version 6.2. 2021 https://training.cochrane.org/handbook Accessed 31 Jan 2024.

Page MJ, McKenzie JE, Bossuyt PM, Boutron I, Hoffmann TC, Mulrow CD, et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ. 2021;372:1–9.

Covidence. Covidence - Better systematic review management. https://www.covidence.org/ Accessed 31 Jan 2024.

Shea BJ, Reeves BC, Wells G, Thuku M, Hamel C, Moran J, et al. AMSTAR 2: a critical appraisal tool for systematic reviews that include randomised or non-randomised studies of healthcare interventions, or both. BMJ. 2017;21(358):1–9.

Joanna Briggs Institute. Critical Appraisal Tools. https://jbi.global/critical-appraisal-tools Accessed 31 Jan 2024.

Horntvedt MT, Nordsteien A, Fermann T, Severinsson E. Strategies for teaching evidence-based practice in nursing education: a thematic literature review. BMC Med Educ. 2018;18(1):1–11.

Ramis M-A, Chang A, Conway A, Lim D, Munday J, Nissen L. Theory-based strategies for teaching evidence-based practice to undergraduate health students: a systematic review. BMC Med Educ. 2019;19(1):1–13.

Rethlefsen ML, Kirtley S, Waffenschmidt S, Ayala AP, Moher D, Page MJ, et al. PRISMA-S: an extension to the PRISMA Statement for Reporting Literature Searches in Systematic Reviews. Syst Rev. 2021;10(1):1–19.

Song CE, Jang A. Simulation design for improvement of undergraduate nursing students’ experience of evidence-based practice: a scoping-review protocol. PLoS ONE. 2021;16(11):1–6.

Cui C, Li Y, Geng D, Zhang H, Jin C. The effectiveness of evidence-based nursing on development of nursing students’ critical thinking: A meta-analysis. Nurse Educ Today. 2018;65:46–53.

Ghaffari R, Shapoori S, Binazir MB, Heidari F, Behshid M. Effectiveness of teaching evidence-based nursing to undergraduate nursing students in Iran: a systematic review. Res Dev Med Educ. 2018;7(1):8–13.

Wu Y, Brettle A, Zhou C, Ou J, Wang Y, Wang S. Do educational interventions aimed at nurses to support the implementation of evidence-based practice improve patient outcomes? A systematic review. Nurse Educ Today. 2018;70:109–14.

Ramis MA, Chang A, Nissen L. Undergraduate health students’ intention to use evidence-based practice after graduation: a systematic review of predictive modeling studies. Worldviews Evid Based Nurs. 2018;15(2):140–8.

Albarqouni L, Hoffmann T, Straus S, Olsen NR, Young T, Ilic D, et al. Core competencies in evidence-based practice for health professionals: consensus statement based on a systematic review and Delphi survey. JAMA Netw Open. 2018;1(2):1–12.

Hitch D, Nicola-Richmond K. Instructional practices for evidence-based practice with pre-registration allied health students: a review of recent research and developments. Adv Health Sci Educ Theory Pr. 2017;22(4):1031–45.

Acknowledgements

The authors would like to acknowledge research librarian Rasmus Sand for competent support in the development of literature search strategies.

Funding

This work was supported by the University College of South Denmark, which was not involved in the conduct of this study.

Author information

Contributions

All authors have made substantial contributions to the conception and design of the study, acquisition of data, analysis and interpretation of data, writing the main manuscript, preparing figures and tables, and revising the manuscript.

Ethics declarations

Ethics approval and consent to participate

Not applicable.

Consent for publication

Not applicable.

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/. The Creative Commons Public Domain Dedication waiver (http://creativecommons.org/publicdomain/zero/1.0/) applies to the data made available in this article, unless otherwise stated in a credit line to the data.

About this article

Cite this article

Nielsen, L.D., Løwe, M.M., Mansilla, F. et al. Interventions, methods and outcome measures used in teaching evidence-based practice to healthcare students: an overview of systematic reviews. BMC Med Educ 24, 306 (2024). https://doi.org/10.1186/s12909-024-05259-8

DOI: https://doi.org/10.1186/s12909-024-05259-8